Twice per year, the American Mathematical Society (AMS) and the Mathematical Sciences Research Institute (MSRI) jointly sponsor a Congressional briefing.[1]

These briefings provide an opportunity for communicating information to policymakers and, in particular, for the mathematics community to tell compelling stories of how our federal investment in basic research in mathematics and the sciences pays off for American taxpayers and helps our nation maintain its place as the world leader in innovation. Attendees on 28 June 2017 included Congressional staffers and representatives from the NSF Division of Mathematical Sciences, and Senate Minority Leader Charles Schumer and House Minority Leader Nancy Pelosi dropped in. David Eisenbud (MSRI), Karen Saxe (AMS), Anita Benjamin (AMS), and Kirsten Bohl (MSRI) organized this event. Presenter David Donoho has kindly shared these notes for his Congressional briefing. It is notable that the mathematicians had little interest in applications, yet the math inspired original MRI research and applications.

—Karen Saxe, Director, AMS Washington Office, and David Eisenbud, Director, MSRI

1. Background

MRI scans are crucial tools in modern medicine: 40 million scans are performed yearly in the US. Individual 2-D MR images portray soft tissues that X-ray CT can’t resolve, without the radiation damage that X-rays produce. MRIs are essential in some fields, for example to neurologists seeking to pinpoint brain tumors or study demyelinating diseases and dementia.

MRI technology is remarkably flexible, constantly spawning new applications. Dynamic MRIs allow cardiologists to view movies showing muscular contractions of the beating heart. 3-D head MRIs allow neurosurgeons to meticulously plan life-and-death brain surgeries, in effect to conduct virtual fly-throughs ahead of time.

Traditionally MRIs required lengthy patient immobilization, in some cases for hours. Long scan times limit the number of patients who can benefit from MRI, and increase the cost of individual MRIs. Long scan times also make it difficult to serve fidgety children. Ambitious variations of MR imaging, such as dynamic cardiac imaging and 3-D MRI, require far longer scan times than simple 2-D imaging; such long scan times have typically been awkward or even prohibitive. Yet patients with arrhythmias and afib could get better treatment based on dynamic cardiac imaging; patients with aggressive prostate cancer could get much more accurate biopsies under 3-D MRI guidance; and many neurosurgeries could be much safer and more effective if surgeons could plan surgeries with 3-D MRI-based fly-throughs beforehand.

Help is on the way: inspired by federally funded mathematical sciences research, patients everywhere will soon complete traditional clinical MRI scans much more rapidly. Ambitious but previously rarely available MRI applications will go mainstream.

2. Accelerated Imaging by Compressed Sensing

In 2017, the FDA approved two new MRI devices, which dramatically speed up important MRI applications, by factors of 8 to 16. Siemens’ technology (CS Cardiac Cine) allows movies of the beating heart; GE’s technology (HyperSense) allows rapid 3-D imaging, for example of the brain. Both manufacturers say their products use Compressed Sensing (CS), a technology that first arose in the academic literature of applied mathematics and information theory.

Roughly ten years ago, mathematicians, including Emmanuel Candès, now at Stanford, Terence Tao of UCLA, and me, put forward theorems showing that compressed sensing could reduce the number of measurements needed to reconstruct certain signals and images. Partly inspired by these new mathematical results, MRI researchers such as Michael Lustig (now at Berkeley) and collaborators in John Pauly’s lab at Stanford began to work intensively on new scanning protocols and algorithms. Compressed-sensing-inspired imaging became in the next few years a leading theme at international conferences of MRI researchers. Convincing clinical evidence soon emerged. Shreyas Vasanawala at Lucile Packard Children’s Hospital (Stanford) and co-authors (including Lustig and Pauly) showed in 2009 that pediatric MRI scan times could be reduced in representative tasks from 8 minutes to 70 seconds, while preserving the diagnostic quality of images. Fidgety children could thus be imaged successfully and comfortably with far less frequent use of sedation. Other researchers demonstrated impressive speedups in dynamic heart imaging in the clinical setting. Manufacturers ultimately became convinced; serious commercial development followed, leading to 2017’s FDA bioequivalence approvals.

Researchers at GE and Siemens tell me that accelerated imaging can be expected to spread broadly to many other MRI settings. The industry welcomes the new approach to accelerate MRI scans, and is seeking to deploy it where possible.

Transforming the MR industry takes time. In each proposed application, the FDA must first certify that accelerated imaging is bioequivalent to traditional imaging: that it really can produce diagnostic quality images in less scan time. Seeking FDA approval demands a rigorous multi-step evaluation taking years. From this viewpoint, it seems stunning that compressed-sensing inspired products are now on the market, only about a decade after the initial academic journal articles. This is certainly a testament to the energy and talents of MRI researchers and developers, and also a sign that the underlying mathematics was solid, reliable, and applicable.

The technology remains to be deployed into hospitals and clinics. More than 5 billion USD in MR scanners are sold annually; service and maintenance costs add billions more. There are tens of thousands of MRI scanners installed in the US currently, and many more worldwide. Improving MRI, by whatever means—mathematical ideas like compressed sensing, or physical ingredients like more powerful magnets—is always a gradual process of getting new equipment into the marketplace or retrofitting existing scanners.

For patients, such improvements can’t come soon enough. My own son is a neurosurgery resident at a large county hospital that serves many indigent and uninsured patients. He treats dramatic injuries to the head, from gunshot wounds, blunt force trauma, and car wrecks; but also less dramatic yet serious problems like aneurysms and brain tumors. In his hospital, which does not yet have the accelerated MRI technology I’ve been speaking about, lengthy delays for MRIs are common; sometimes he must open the patient’s cranium without any MRI at all. In other cases, he only can inspect a few 2-D slices, rather than a full 3-D image.

My son’s comment about accelerating MRIs: “Faster, please.”

3. The Role of Mathematics Research

NSF-funded mathematical sciences research and NIH funding of cognate disciplines played a key role in these developments.

First: how can mathematics be helpful? Don’t the MRI researchers have all the equipment they need to simply do whatever they need, and then see experimentally if it works?

The answer is interesting. Mathematicians build formal models of systems and then derive logical consequences of those formal models. Whatever mathematicians discover about such models is logically certain. In everyday experience, almost anything can be doubted, nothing is as it seems, whatever can go wrong will go wrong. In mathematics, a true statement is true, full stop. If a mathematical theorem says something surprising or impressive, you don’t waste time doubting the messenger; you just read the proof and (if you have the background) you will see why the theorem is true.

Mathematical results can be transformative when applied to a phenomenon in the real world that is poorly understood and as a result controversial. And in the case of accelerated scanning, that was the case. Prior to the mathematical work I mentioned above, isolated projects had observed experimentally that in special circumstances, researchers obtained impressive speed improvements over traditional MRI scanning. However, the MRI community as a whole was uncertain about the scope of such isolated results, and the impact of the published experimental evidence was therefore limited.

Mathematical research can go farther and deeper than experiments ever will go. It can give guarantees that certain outcomes will always result or that certain outcomes will never result. When you first hear of such a guarantee, it can rock your world.

Early mathematical results about compressed sensing guaranteed imaging speedups under certain conditions. This got the attention of MRI researchers and inspired some, such as Michael Lustig, to go all in on compressed sensing. Eventually, the mathematical certainty offered by the guarantees and their breadth broke the apparent ‘logjam’ of hesitation and suspicion that would have otherwise greeted MRI. Compressed sensing became a hot topic in MRI research.

Mathematics can also be a floodlight illuminating clearly the poorly understood path ahead. MRI researchers have always wanted to accelerate MRI; they just didn’t see how to do it. Mathematicians proposed new algorithms and gave persuasive guarantees based on illuminating principles.

4. Some Mathematics

I’ll mention very briefly some mathematical ideas that were mobilized to study compressed sensing.

At its heart, compressed sensing (CS) proposes that we take seemingly too few measurements of an object, so that from the given data there are many possible reconstructions. CS selects from among the many possible candidates the one minimizing the so-called Manhattan distance[2]. Mathematics guarantees that the optimizing reconstruction (under conditions) is exactly the one we want.
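The selection rule just described can be tried on a toy problem. The sketch below is only an illustration, not the algorithm used in any scanner. It assumes a small random measurement matrix with unit-norm columns, and it finds the minimum-Manhattan-norm candidate by brute force (checking every set of m columns) instead of using a real convex optimization solver. All function names are hypothetical.

```python
import itertools
import math
import random


def solve_square(M, b):
    """Solve M z = b by Gaussian elimination; return None if M is singular."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            return None
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= f * aug[col][c]
    z = [0.0] * n
    for i in reversed(range(n)):
        s = aug[i][n] - sum(aug[i][j] * z[j] for j in range(i + 1, n))
        z[i] = s / aug[i][i]
    return z


def l1_norm(x):
    """Manhattan (l1) norm: sum of absolute values."""
    return sum(abs(v) for v in x)


def measure(A, x):
    """Apply the measurement matrix: y = A x."""
    return [sum(a * v for a, v in zip(row, x)) for row in A]


def min_l1_solution(A, y):
    """Brute-force minimizer of ||x||_1 subject to A x = y.

    A linear program attains its optimum at a basic solution, so for a
    full-rank m-by-n matrix it suffices to try every set of m columns.
    Feasible only for tiny problems (assumption: toy sizes).
    """
    m, n = len(A), len(A[0])
    best = None
    for support in itertools.combinations(range(n), m):
        sub = [[A[i][j] for j in support] for i in range(m)]
        z = solve_square(sub, y)
        if z is None:
            continue  # singular column set, no basic solution here
        x = [0.0] * n
        for j, v in zip(support, z):
            x[j] = v
        if best is None or l1_norm(x) < l1_norm(best):
            best = x
    return best


def random_unit_columns(m, n, seed=0):
    """Random Gaussian m-by-n matrix with columns scaled to unit length."""
    rng = random.Random(seed)
    cols = []
    for _ in range(n):
        c = [rng.gauss(0.0, 1.0) for _ in range(m)]
        norm = math.sqrt(sum(v * v for v in c))
        cols.append([v / norm for v in c])
    return [[cols[j][i] for j in range(n)] for i in range(m)]


# 4 measurements of an 8-entry object: far too few to pin it down by
# linear algebra alone, yet the l1 rule singles out the sparse answer.
A = random_unit_columns(m=4, n=8)
x_true = [0.0, 0.0, 2.5, 0.0, 0.0, 0.0, 0.0, 0.0]
y = measure(A, x_true)
x_hat = min_l1_solution(A, y)
```

Here `x_true` has a single nonzero entry. With unit-norm columns that are pairwise non-parallel, the triangle inequality shows that no other candidate consistent with `y` has a Manhattan norm as small, so `x_hat` agrees with `x_true` up to rounding.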

The bridge between imaging and mathematics is produced by mathematical analysis of convex optimization algorithms that shows that the following statements are equivalent.

• Consider 100,000 random measurements of a 1000 by 1000 image. Suppose the underlying image has only 10,000 nonzero wavelet coefficients. With overwhelming probability this image can be reconstructed by minimizing the Manhattan distance of its wavelet coefficients.

• Consider a random 900,000-dimensional linear subspace of 1,000,000-dimensional Euclidean space. The chance that this slices into a certain regular simplicial cone with 10,000-dimensional apex is negligible.
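In symbols, the equivalence of the two statements can be sketched as follows. The notation is assumed for illustration and does not come from the briefing: A is the n-by-N measurement matrix, x_0 is the vector of wavelet coefficients, and D is the descent cone of the l1 norm at x_0, one standard way to formalize the cone in the second statement. The dimension count assumes A has full row rank, which holds with probability one for random measurements.

```latex
\hat{x} \;=\; \arg\min_{x \in \mathbb{R}^N} \|x\|_1
\quad \text{subject to} \quad A x = A x_0,
\qquad N = 10^6,\; n = 10^5 .

\hat{x} = x_0 \text{ is the unique minimizer}
\;\iff\;
\operatorname{null}(A) \cap \mathcal{D}(\|\cdot\|_1, x_0) = \{0\},

\mathcal{D}(\|\cdot\|_1, x_0)
  = \{\, d : \|x_0 + t d\|_1 \le \|x_0\|_1 \text{ for some } t > 0 \,\},
\qquad \dim \operatorname{null}(A) = N - n = 900{,}000 .
```

The null space of a random A plays the role of the random 900,000-dimensional subspace: reconstruction succeeds exactly when that subspace misses the cone.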

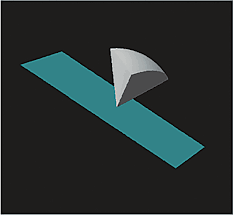

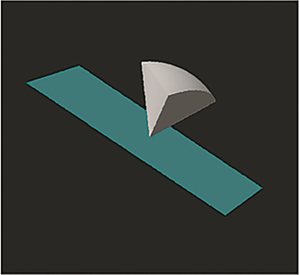

The mathematical heart of the matter is thus the following geometric probability problem. We are in an N-dimensional Euclidean space, N large. We consider a convex cone K with its apex at zero, and sample an M-dimensional random linear subspace L. What is the probability that L intersects K? (See Figure 1.)

Figure 1. The underlying mathematics asks for the probability that a high-dimensional cone intersects a high-dimensional plane.

As it turns out, this probability depends on the cone K and on the dimensions M and N; let’s denote it P(K; M,N). The central surprise of compressed sensing is that, for the cones K we are interested in, we can have P(K; M,N) essentially zero even when M is nearly as large as N.

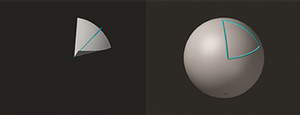

In the 1960s two papers by federally funded US university professors introduced key tools to attack our problem. Harold Ruben, then at Columbia, generalized Gauss’s formula for spherical triangles in dimension 3 to all higher dimensions, obtaining general formulas for the spherical volume of high-dimensional spherical simplices. Branko Grünbaum at University of Washington formalized the cone intersection probability P(K; M,N) in the case M>1 and developed some fundamental formulas for P(K; M,N).

Figure 2. The probability that a line intersects a cone is given by the area of the corresponding region R on the surface of the sphere. When R is a spherical triangle, the great mathematician Gauss found a beautiful formula for area: simply the sum of the three internal angles, minus π. This formula is easy to understand but conceals a surprising wealth of intellectual meaning.
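The formula in the Figure 2 caption is easy to check numerically in the simplest case. The sketch below is an illustration under stated assumptions. It takes the unit sphere, uses the positive octant as the cone (its spherical triangle has three right angles), and normalizes area by 2π, since a line through the origin meets the sphere in two antipodal points. The function names are hypothetical.

```python
import math
import random


def spherical_triangle_area(alpha, beta, gamma):
    """Gauss's formula on the unit sphere: sum of internal angles minus pi."""
    return alpha + beta + gamma - math.pi


def line_hit_probability(area):
    """Chance that a uniformly random line through the origin meets the cone.

    Assumes the region fits inside an open hemisphere, so a line can meet
    it through at most one of its two antipodal points: 2 * area / (4 * pi).
    """
    return area / (2.0 * math.pi)


def monte_carlo_octant(trials, seed=0):
    """Estimate the same probability by sampling random directions."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        # A Gaussian vector has a uniformly distributed direction.
        v = [rng.gauss(0.0, 1.0) for _ in range(3)]
        # The line spanned by v meets the octant cone if v or -v lies in it.
        if all(c > 0 for c in v) or all(c < 0 for c in v):
            hits += 1
    return hits / trials


right_angle = math.pi / 2
area = spherical_triangle_area(right_angle, right_angle, right_angle)
p_exact = line_hit_probability(area)
p_mc = monte_carlo_octant(200_000)
```

For the octant, Gauss's formula gives area π/2, one eighth of the sphere, so `p_exact` is 1/4; the simulated value `p_mc` should land close to it.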

In the 1990s, German geometric probabilist Rolf Schneider and Russian probabilist Anatoly Vershik showed how to use Ruben’s and Grünbaum’s formulas to compute P(K’; M,N) for some interesting cones K’. In effect, they showed that (Ruben’s generalization of) Gauss’s charming formula was the heart of the matter; everything reduced to the computation of volumes of spherical simplices.

By the mid-2000s several approaches were developed to show that the central miracle of compressed sensing extended over a wide range of combinations of M and N; Candès and Tao (mentioned above) had developed by other means upper bounds on P(K; M,N), establishing that there was a useful such range. NSF-funded postdoc Jared Tanner (now at Oxford) worked with me to apply geometric tools of Schneider, Vershik, and Ruben to the cones K of interest. We developed formulas revealing the precise number of measurements needed for exact reconstruction.

Since then, slick machinery was developed by two teams at Caltech, Oymak/Hassibi and Amelunxen/McCoy/Tropp, to get these and many other results.[3]

5. The Role of Federal Funding

The success story of compressed sensing is a testament to federal funding. Federal funding of pure and applied science has endowed the United States with research universities that are the envy of the world. Federal funding of mathematical sciences has led to amazing collections of sophisticated mathematical talent within those great universities, housed in departments of mathematics, applied mathematics and statistics, electrical and systems engineering and computer science, and even biology and chemistry.

These great institutions attract terrifically talented young people from around the world to come here to learn and become part of our technical infrastructure, strengthening the next generation of US science and industry.

Federal funding enabled the breakthroughs of compressed sensing along three paths:

• Federal funding enabled basic research in high-dimensional geometry, which is at the heart of compressed sensing. Harold Ruben and Branko Grünbaum were housed in statistics and mathematics departments at US universities when they did their foundational work.

• Federal funding enabled cross-disciplinary work that identified the key questions for mathematicians to resolve. In the late 1990s, NSF funded a joint project between optimization specialists Stephen Boyd and Michael Saunders and myself at Stanford, which studied the Manhattan metric for important data processing problems. Prior to this project, most scientists used the Crow Flight metric instead of the Manhattan metric.[4] That project and its sequels funded work by Xiaoming Huo (now at the Georgia Institute of Technology), Michael Elad (now at Technion), and myself that proved mathematically that the Manhattan metric could pick out the unique correct answer, when the answer we are seeking had a very strong mathematical sparsity property.

• Federal funding enabled focused research to go far deeper into this surprising area and dramatically weaken the required sparsity, for example by using random measurements. Emmanuel Candès (then at California Institute of Technology), Terence Tao (University of California, Los Angeles), many others, and I were all supported in some way by NSF to study random measurements intensively.

This is above all a case where the federal research funding system has worked. NSF support of mathematics and statistics and NIH support of electrical engineering and radiology have really delivered. The research universities, such as Stanford University, California Institute of Technology, University of California, Los Angeles, and University of California, Berkeley, to mention only a few, have really delivered. As we have seen, industrial research groups at Siemens and GE have responded enthusiastically to the initial academic research breakthroughs.

Enabling the rapid transition were the great students and facilities available at Stanford University, produced by decades of patient federal support and also visionary campus planning and generous private donations. When Michael Lustig came to campus in the early 2000s as a graduate student of electrical engineering professor John Pauly, his office in Packard was less than a hundred yards away from the statistics department in Sequoia Hall, maybe 100 yards away from a GE-donated high-field MR research scanner, and maybe 300 yards away from a medical MRI research facility at Stanford Medical School. In a few hours, Lustig could literally engage in impromptu conversations about high-dimensional geometry with mathematical scientists, about specific MR pulse sequences with electrical engineers, and about specific possible clinical trials with doctors at Stanford Hospital. This tremendous concentration of resources allowed him in his thesis to produce results that inspired many MRI researchers to pursue compressed sensing-inspired research programs.[5] Today’s federal funding model supported the development of such concentrated facilities and talent over decades of patient investment; the model has delivered.

The cost-benefit ratio of mathematical research has been off-scale. The federal government spends about $250 million per year on mathematics research. Yet in the US there are 40 million MRI scans per year, incurring tens of billions in Medicaid, Medicare, and other federal costs. The financial benefits of the roughly 10-to-1 productivity improvements now being seen in MRI could soon far exceed the annual NSF budget for mathematics research.

ACKNOWLEDGMENT. There are by now literally thousands of papers in some way concerned with compressed sensing. It’s impossible to mention all the contributions that deserve note. I’ve mentioned here a select few that I could tie into the theme of a Congressional Lunch on June 28, 2017.

Thanks to Michael Lustig, PhD (University of California, Berkeley), Edgar Mueller (Siemens Healthineers), Jason Polzin, PhD (GE Global Research), and Shreyas Vasanawala, MD (Stanford University), for patient instruction and clarifying explanation about MRI applications. Thanks to Ron Avitzur and Andrew Donoho for help with Pacific Tech Graphing Calculator Scripts used for the above figures and for movies that were used in the briefing. Thanks to Emmanuel Candès (Stanford University) and David Eisenbud (MSRI) for helpful guidance.

[1] See the first item of “Inside the AMS” in the September 2017 Notices.

[2] Here we really mean l1 norm. I figured that since Senator Charles Schumer would be present, we really should have a way to connect the proceedings to New York State!

[3] Actually many other ways have arisen to understand these results, using sophisticated ideas from metric geometry to information theory. I would mention for example work of Roman Vershynin, Ben Recht and collaborators, and of Mihailo Stojnic. But in a brief presentation for Congressional staffers, I couldn’t mention all the great work being done.

[4] Of course Crow Flight metric means standard Euclidean or l2 distance.

[5] Lustig credits many other MRI researchers with decisive contributions in bringing CS into MR Imaging. He says: “I would emphasize the contributions of Tobias Block pubmed/17534903 and Ricardo Otazo (with Daniel Sodickson) pubmed/20535813. These guys have taken the clinical translation of Compressed Sensing orders of magnitude forward.” He also mentions key contributions of Zhi-Pei Liang of UIUC, Joshua Trzasko of Mayo Clinic, and of Alexey Samsonov and Julia Velikina of UW Madison. It seems that federal funding was important in each case.